My first name has literally two syllables. And it doesn’t have one of those á, é, í, ó, ú, ü, ñ or any other seizure-inducing accents as well. It is also insanely phonetic. I mean, look at the name “Isla”, it’s pronounced “EYE-la”. Huh??

In spite of my name being so simple to pronounce, here in the US, I have noticed that people get really anxious to call me by my name. I have also noticed that this is usually when I first introduce myself, as it shows how it should really be pronounced.

Let’s see why that’s difficult for them to pronounce. For example, in my mother tongue, we don’t have sounds for “F” or “Z”. We can still pronounce it nevertheless because we usually study English as a second language and we learn words like “food” and “zoo” early on. People in the US are almost never exposed to Indian languages and some of our pronunciations are quite unfamiliar for them. Same for us Indians; we can never, not roll an “R”.

So, I wanted to do something that any other Indian would do/has done. Changing my name, so that the locals in the US can pronounce my name better. A lot of people do it. “Devraj” to “Dave”, “Samyuktha” to “Samantha”, etc.

Now, I wanted to find the closest name that’s familiar to the people in the US. One approach is anxiously finding American names, preferably starting with an “A”. As I did, for a few seconds. The other approach is to use automatic string matching.

Nerd shit. Skip if not interested.

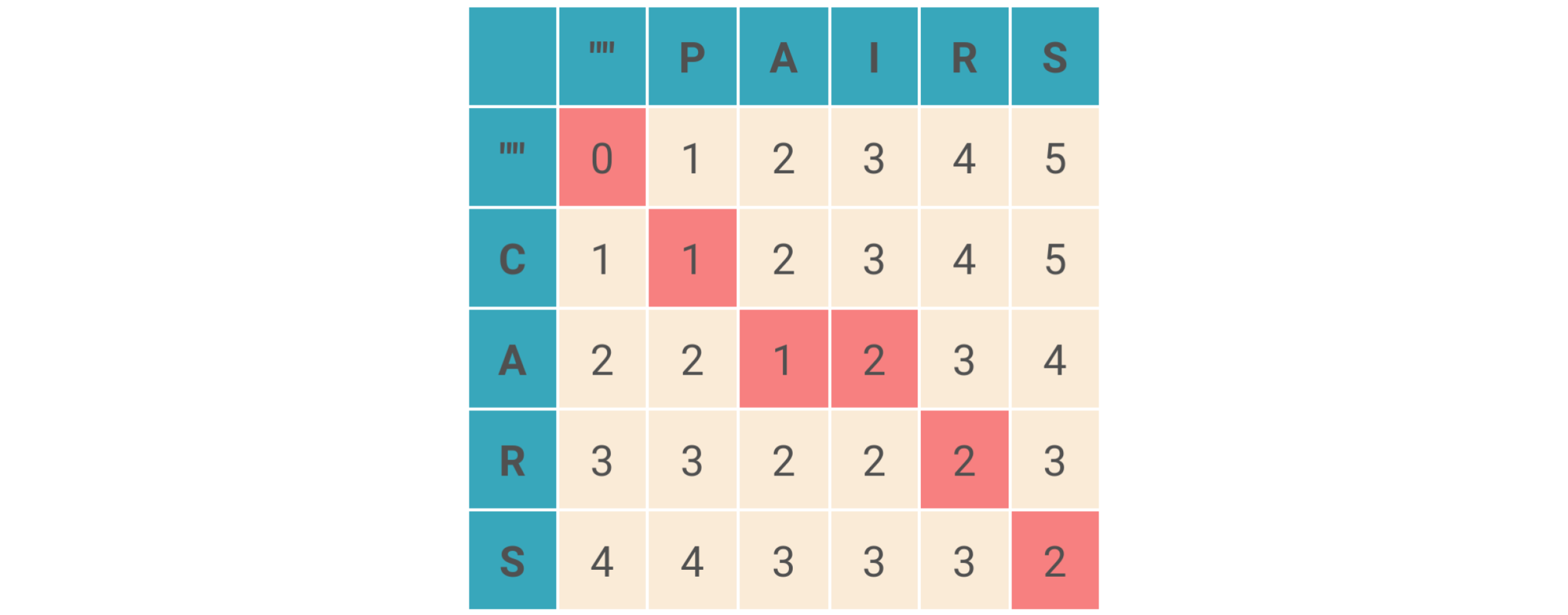

I took the data from the US census available on Kaggle. I selected the first name. Stored them all in a pickle so that I can dynamically load them. (And also, I love pickles. There’s something about it that feels very friendly and inviting). Now, I had studied a little bit about the Levenshtein Distance. The TLDR version of it is, the Levenshtein Distance calculates the similarity index of two strings and quantifies it on a 0 to 1 scale. The problem now boils down to finding the metric with the input string against a vector of strings and selecting the string/s with the maximum similarity index.

I did first implement it using the FuzzyWuzzy package. But for this, I had to calculate the distance of the entire vector and select the argmax, and then handle the ties and exceptions, etc. Then, I found a library that has an optimized implementation of the Levenshtein Distance for a list of strings. Behold! the DiffLib. I enclosed it all in a friendly HTML form with a Flask backend.

You can check out the Live Demo of the project here. If you wanna check out the code, you can find it here.