This was original research in which we used reinforcement learning, together with computer vision and a Deep Q-Network (DQN), to make a four-legged robot move autonomously.

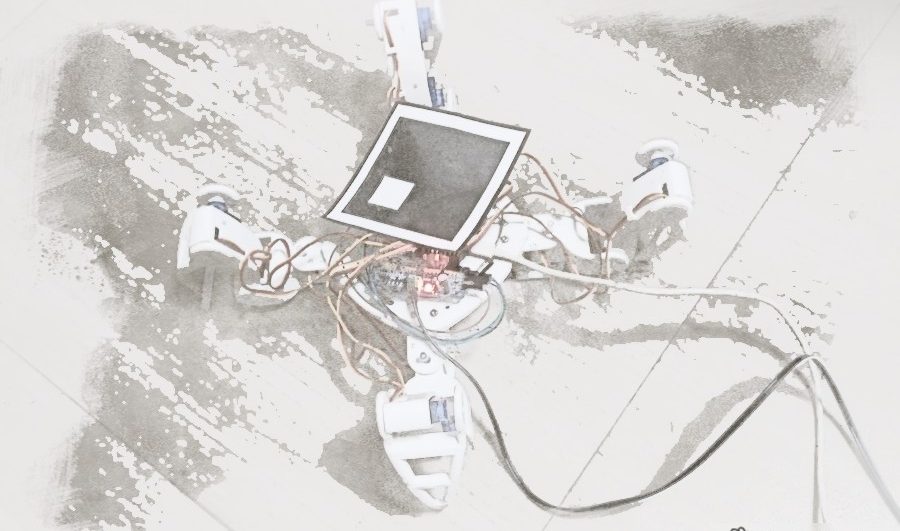

We chose a four-legged robot with three servo motors per leg. We calibrated each servo and restricted its range of motion to a fixed threshold so that each leg has only two states, which gives our state/action space 8 dimensions.
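One way to read the "8 dimensions" is 4 legs × 2 states = 8 discrete actions, where each action drives one leg into one of its two allowed states. The sketch below assumes that interpretation. The leg names, the meaning of the two states ("lifted"/"planted"), and the servo angles are all hypothetical placeholders, not the calibrated values from the project.

```python
# Hypothetical leg names; the original robot's naming is not given.
LEGS = ("front_left", "front_right", "rear_left", "rear_right")
# Assumed meaning of the two allowed states per leg.
STATES = ("lifted", "planted")

# Hypothetical calibrated angles in degrees for the three servos on a leg
# (hip, knee, ankle). Real values would come from calibration.
STATE_ANGLES = {
    "lifted": (90, 60, 120),
    "planted": (90, 100, 80),
}

NUM_ACTIONS = len(LEGS) * len(STATES)  # 4 legs x 2 states = 8 actions


def clamp(angle: float, low: float, high: float) -> float:
    """Restrict a servo command to its calibrated range."""
    return max(low, min(high, angle))


def decode_action(action: int) -> tuple[str, str]:
    """Map a discrete action index in [0, 8) to a (leg, state) pair."""
    if not 0 <= action < NUM_ACTIONS:
        raise ValueError(f"action must be in [0, {NUM_ACTIONS}), got {action}")
    leg_index, state_index = divmod(action, len(STATES))
    return LEGS[leg_index], STATES[state_index]


def servo_targets(action: int) -> tuple[str, tuple[int, int, int]]:
    """Return the leg to move and the servo angles for its target state."""
    leg, state = decode_action(action)
    return leg, STATE_ANGLES[state]
```

For example, `decode_action(3)` yields `("front_right", "planted")`. Encoding actions as small integers keeps the DQN's output layer to one Q-value per action.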

Our objective was to make the robot travel from a starting point to a destination point and to learn the optimal policy for navigating between the two. The main challenge was creating a partially observable environment in which the agent could act in a real-world setting.

We accomplished this by setting up a camera whose field of view covered the robot's current position as well as its initial and destination points. Instead of using the entire frame as the observation (which would be high-dimensional and expensive to process), we used only the agent's current position relative to its initial and destination coordinates.
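A minimal sketch of such a compact observation, assuming the camera yields the robot, start, and destination positions as 2D pixel coordinates. The exact features the project fed to the network are not stated, so the offset vector and the `progress` helper below are illustrative assumptions.

```python
import math


def observation(robot_xy, start_xy, goal_xy):
    """Compact observation: offset from the start and offset to the goal.

    Assumes all three points are (x, y) pixel coordinates from the camera.
    """
    rx, ry = robot_xy
    sx, sy = start_xy
    gx, gy = goal_xy
    return (rx - sx, ry - sy, gx - rx, gy - ry)


def progress(robot_xy, start_xy, goal_xy):
    """Fraction of the start-to-goal distance already covered (hypothetical helper).

    0.0 at the start, 1.0 at the goal; can go negative if the robot moves backward.
    """
    total = math.dist(start_xy, goal_xy)
    if total == 0:
        raise ValueError("start and goal must differ")
    remaining = math.dist(robot_xy, goal_xy)
    return 1.0 - remaining / total
```

Four numbers per step replace a full image frame, which is what makes a small Q-network practical here.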

We used ε-greedy Q-learning with a delayed reward to test the agent's exploration. The agent was rewarded when it moved forward, closer to the destination, and penalized when it moved backward, away from it. At each time step t, the agent received its state along with the corresponding reward signal, and a neural network predicted the Q-value of each action given that state.
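The pieces of this loop that do not depend on the network itself can be sketched as follows. The ±1 reward values, the tolerance, and the function names are assumptions for illustration. The Q-values are taken as plain lists, standing in for the network's output for one state.

```python
import random


def reward(prev_dist: float, curr_dist: float, tol: float = 1e-6) -> float:
    """Assumed reward: +1 for moving closer to the goal, -1 for moving away."""
    delta = prev_dist - curr_dist
    if delta > tol:
        return 1.0
    if delta < -tol:
        return -1.0
    return 0.0


def epsilon_greedy(q_values, epsilon: float, rng: random.Random) -> int:
    """With probability epsilon pick a random action, otherwise the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=q_values.__getitem__)


def td_target(r: float, next_q_values, gamma: float, done: bool) -> float:
    """Q-learning target: r + gamma * max_a' Q(s', a'), or just r at episode end."""
    if done:
        return r
    return r + gamma * max(next_q_values)
```

In a DQN update, the network's prediction Q(s, a) for the chosen action is regressed toward `td_target(...)`, typically with a squared-error loss, while ε is usually decayed over training to shift from exploration to exploitation.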

[Shrisha Bharadwaj – project partner]

Communication between the interpreter and the agent was handled over the MQTT protocol, and the robot's position was detected by tracking a marker with computer vision.
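The write-up does not say how the marker was detected or what the MQTT messages contained. The sketch below assumes a bright marker found by simple intensity thresholding on a grayscale frame (given as nested lists), with its centroid packed into a JSON payload. The threshold, field names, and any topic such as `robot/position` are hypothetical.

```python
import json


def marker_centroid(gray, threshold=200):
    """Return the (x, y) centroid of pixels >= threshold, or None if none are found.

    Assumes a bright marker on a darker background; `gray` is a list of rows.
    """
    sum_x = sum_y = count = 0
    for y, row in enumerate(gray):
        for x, value in enumerate(row):
            if value >= threshold:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return (sum_x / count, sum_y / count)


def position_message(step, xy):
    """Encode the detected position as a JSON payload (hypothetical schema)."""
    return json.dumps({"step": step, "x": xy[0], "y": xy[1]})
```

With an MQTT client library such as paho-mqtt, a payload like this could be sent with `client.publish("robot/position", payload)` and decoded on the other side with `json.loads`.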

Check out my GitHub.